This page contains questions and answers from my talks about the CUE studies. For more questions and answers, see Questions and answers about usability testing.

Overview of this page

- Are five users enough to find 75% of the usability problems?

- CUE-10: Evaluating your evaluation skills

- CUE-10: Other questions

Are five users enough to find 75% of the usability problems?

QUESTION: Why are you saying that five users are not enough?

When I do a usability test, the number of new usability problems I find decreases considerably after the first 5 to 10 usability test participants. This seems to match the statement that 5 users are enough to find 75% of the usability problems.

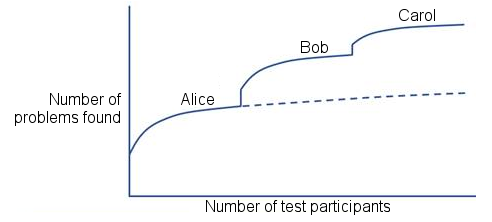

ANSWER: Five users are indeed enough to find 75% of the usability problems that can be found by a specific moderator who uses a specific set of tasks. If you change the tasks or the moderator, new usability problems will invariably be found. The following figure drawn by my colleague Bernard Rummel illustrates this:

The figure shows that Alice finds, for example, 30 problems with her task set and five test participants. If Alice had continued testing with, say, 10 more test participants, chances are that she would have found approximately 10 more problems, as the curve for Alice shows. Bob then independently tests the same website with five other users. The CUE studies show that approximately 10 of Bob’s findings will be problems that Alice found and 20 will be new problems. Similarly, Carol rediscovers approximately 15 problems found by Alice and Bob, and finds 15 new problems. And so on. In CUE-4, where 17 teams participated, even the last team found new, valid problems.
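Adding up the approximate numbers from the Alice, Bob, and Carol example shows why the same 15 test participants find more problems when they are spread across three moderators than when one moderator tests all of them. The figures are the illustrative approximations from the example, not measured data:

$$
\underbrace{30}_{\text{Alice}} + \underbrace{(30 - 10)}_{\text{Bob, new}} + \underbrace{(30 - 15)}_{\text{Carol, new}} = 65
\qquad\text{versus}\qquad
\underbrace{30 + 10}_{\text{Alice alone, 15 participants}} = 40
$$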

QUESTION: Number of test participants required to find 75% of the problems.

You say that hundreds of usability test participants are required to find 75% of the usability problems in any non-trivial website. 75% of what? Compelling, deal-breaking issues for most user segments? Or the whole gamut of problems, including trivial ones?

ANSWER: Most of the problems that were reported by only a few UX professionals in the CUE studies were indeed trivial or minor. But in some of our studies with 15 or more participating teams, for example CUE-4, we saw several problems that were reported by just one UX professional, who considered the problem “major” or “critical”.

Any non-trivial website has hundreds of usability problems. In CUE-4, 17 participating teams reported more than 300 problems. The number grows further if you consider the full range of tasks and user groups; for example, experienced users might encounter a trove of usability problems that inexperienced users wouldn’t. Also, it might take hundreds of test participants to go through the different paths of a complex website and find a majority of the usability problems.

QUESTION: How do you know what all the problems are or when you’ve found 75% of them?

ANSWER: We don’t know what all the problems are. We never conducted a CUE study where we could reliably estimate the total number of usability problems based on our data. In several of our CUE studies, more than 300 problems were reported. Almost all reports contained unique findings, some of which were “major” or “critical”. Chances are that if we had continued testing with more moderators, new tasks and different classes of users, many more usability problems would have been found. In CUE-4, where 17 teams participated, all teams reported valid problems that were not reported by any other team.

QUESTION: If the reviewers are all seeing different things within the same set of sessions, is it really about the number of different moderators OR is it about the number of people doing review and analysis?

ANSWER: Please read our CUE-9 article, which studied the so-called evaluator effect. The evaluator effect is the observation that usability evaluators who analyze the same usability test sessions often identify substantially different sets of usability problems, even when the evaluators are experienced.

Even though we don’t know how many usability problems there are on a non-trivial website, we know that the number is huge. Given that there are hundreds of usability problems and you shouldn’t report more than 20 to 30 in a usable usability test report, it is not surprising that different moderators report different usability problems. An interesting result from CUE-9 is that even very experienced evaluators report different problems.

CUE-10: Evaluating your evaluation skills

QUESTION: Would you recommend a UX team do evaluations similar to CUE-10 internally on their own moderation? It’s something I’ve done once and found extremely valuable, but I’m curious whether you have any experience repeating this type of meta-evaluation to help teams improve on an ongoing basis.

ANSWER: Yes, I recommend that you evaluate your usability evaluation skills regularly to keep sharp. The same applies to many other UX skills, in particular interviewing.

Good: Watch videos of yourself moderating or interviewing.

Better: Ask your colleagues to observe you while you moderate or interview and comment on your performance.

Best: Ask a neutral, recognized expert to observe you while you moderate or interview and comment on your performance. The CPUX-UT certification examination includes an assessment of your ability to moderate a usability test and report its results. The checklist for the practical part of the CPUX-UT certification examination is freely available, even if you don’t want to take the certification examination.

QUESTION: What should I look for when I review my test materials and my own video of a session or the video of a colleague?

ANSWER: I recommend that you use the checklist for the practical part of the CPUX-UT certification examination. The checklist has about 100 items. It is based on expert experience and on the evaluation of more than 100 usability test reports.

The checklist is freely available, even if you don’t want to take the certification examination.

QUESTION: How should I give feedback to a colleague who wants to become a better moderator?

ANSWER: It depends on how willing your colleague is to listen to criticism.

- Provide a bit of feedback first. See how your colleague reacts. If they change their behavior, provide further advice. If they say “thank you” but continue as before, providing further advice is probably a waste of your precious time.

- Express your criticism diplomatically and constructively. Criticize the moderation, not the moderator.

- Remember to praise, that is, point out the things that your colleague does well. 25 to 50% of your feedback should be praise.

- Don’t just focus on moderation. Also consider the communication of the usability test results, for example, in a usability test report. Is the usability test report usable for all the stakeholders who receive it?

CUE-10: Other questions

QUESTION: How did moderation “errors” affect the number or quality of usability issues the moderators found?

SHORT ANSWER: We did not see any glaring examples of serious or critical usability issues that were overlooked because of moderation errors.

LONG ANSWER: Moderation errors, such as poor time management, caused some teams to skip tasks; usability issues associated with skipped tasks were neither found nor reported.

Reporting the usability issues found was not a focus of CUE-10. As a consequence, several teams who were short of time wrote only a superficial usability test report, which made the reported usability issues hard to compare (I tried!).

In CUE-10, as in all other CUE studies, some serious or critical issues were not reported by some teams. We do not know if this is because the moderator overlooked the issue, possibly because of a moderation error, or because the moderator decided that the issue was less important than the other usability issues that they reported.

Except for CUE-3, which focused on usability inspection, I do not recall having seen any false problems (false positives) in any CUE study, that is, reported usability problems that neutral experts would not have accepted as valid. In CUE-10 and in other CUE studies, I have seen descriptions of usability issues that suffered from quality problems, for example, descriptions that were hazy, hard to understand, or not actionable.

QUESTION: Re: time management. Were moderators given guidelines for how long each task should take?

ANSWER: No. In the CUE studies, we did not want to give experienced UX professionals detailed guidelines on how to do a usability test. We did impose a time limit of 40 minutes on individual sessions for practicality and consistency. We deliberately chose six usability test tasks that might be challenging to get through within 40 minutes to study how moderators managed time.
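A quick calculation shows how tight the budget is. Assuming that the briefing and debriefing also had to fit within the 40-minute session, each task gets on average less than:

$$
\frac{40\ \text{minutes}}{6\ \text{tasks}} \approx 6.7\ \text{minutes per task}
$$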

QUESTION: When deciding to end a task – how do you avoid making the participant feel bad for not succeeding?

ANSWER: Put a note in the briefing that you might end a task early to make sure you get to all the tasks. For more advice on this important topic, please see the CUE-10 article, section “Complimenting Test Participants.”

QUESTION: How do you break a participant out of a long monologue…some like to talk a LOT.

ANSWER: See the examples in the CUE-10 article, section “Managing Time,” under “Stopped a task when the usability problem was clear to the moderator.” We did see a few examples of test participants who spoke extensively without being interrupted by the moderator.

Personally, I have had some success stopping a protracted monologue by saying,

“Thank you for your helpful suggestions. Please move on with the task” or

“We’ll stop here since we’ve learned what we can about this task and move on to the next task.”